HPC Environment User Guide

This is an old user guide. The new one is located here:

https://version.helsinki.fi/it-for-science/hpc/-/wikis/home

0.0 What Is the HPC Environment For?

A High Performance Computing platform, such as the University of Helsinki's Turso, is an amalgam of multiple interconnected instances intended to provide the computational muscle to turn demanding workloads into I/O-bound workloads. We are frequently asked whether X, Y or Z can be done on the HPC resources. This section answers these common questions.

What you can do

- You need an elementary understanding of Linux, but you may use the system to learn more

- Graphical user interfaces are limited to JupyterHub and VDI Turso.

- You may access and use your files from your laptop and other computers through NFS and Samba file shares.

- You may work in a terminal, in interactive mode

- You may work in batch mode

- You may work with exceptionally large I/O workloads and datasets

- You may work with serial processes

- You may work with heavy parallel processes

- You may work with GPUs

- You may use containers (any container convertible to run under Singularity)

- You may do software development and debugging

- You may use a wide range of pre-installed software, both open source and commercially licensed

- You may install and create your own software installations, as long as elevated privileges are not required

- We may provide software packages, but we do not provide training for their production use.

- You may create timed and automated workflows

- You may request resource reservations for courses etc.

What you cannot do

- You cannot run Windows or Windows software

- You cannot run GUI applications over X at a usable speed (except on the VDI Turso virtual login node)

- You cannot have elevated privileges

- You cannot reserve dedicated resources and then leave them idle

- You cannot use Turso for computing on sensitive data. Arkku, however, can be used.

- You cannot use Docker; Singularity can be used instead

This user guide assumes an elementary understanding of Linux. For your own benefit, take the time to familiarise yourself with the basic concepts. This will take you a long way towards using the resources effectively.

This guide will walk you through the HPC environment with examples and use cases but will not teach you Linux usage. We will help you as much as we can along the way.

1.0 Introduction

Welcome, we're glad you made it here. These documents guide you through the basics of the university high performance computing resources, services and related storage solutions.

If you are completely new to Linux, please see the CSC tutorial. From this point forward, we assume that you have gone through it.

Mandatory reading for every user: before you move on, please read Lustre Best Practices.

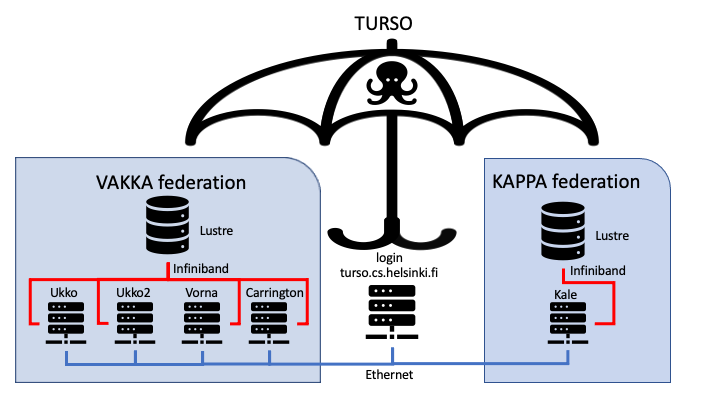

Turso (turso.cs.helsinki.fi) is a nested, federated cluster that includes all of the computational resources of the University. Two major Lustre file systems, Kappa and Vakka, form the backbone of the federation, and the computational elements are clustered around them. Login nodes (turso01..03) and JupyterHub serve as access points to the federations.

The Vakka Federation is now a member of Turso and includes four subclusters: Vorna, Ukko2, Ukko and Carrington.

The Kappa Federation joined Turso later. Kappa includes Kale.

All Federation components are available through Turso login nodes.

Vakka is mounted at /wrk-vakka on Turso login nodes [ /wrk is a symbolic link to /wrk-vakka on Vorna, Ukko and Carrington compute nodes ]

Kappa is mounted at /wrk-kappa on Turso login nodes [ /wrk is a symbolic link to /wrk-kappa on Kale compute nodes ]

Make sure that your datasets are on Kappa if you use Kale nodes, or on Vakka if you use other nodes. For example, data on Vakka is not visible to processes on Kale. We will improve the federation selection process in 2022Q1 and allow users to set environments and associations correctly.
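As a quick sanity check before submitting, the cluster-to-filesystem mapping above can be written as a small shell function. This is a minimal sketch that makes two assumptions: cluster names are given in lowercase, as with -M, and datasets are referred to by their login-node paths (/wrk-vakka or /wrk-kappa). The function names are hypothetical and are not part of any Turso tool.

```shell
# Sketch: check that a dataset path lives on the Lustre filesystem that the
# target cluster's compute nodes can see. Mapping taken from this guide:
# Kale -> Kappa (/wrk-kappa), Vorna/Ukko/Carrington -> Vakka (/wrk-vakka).

# Print the login-node mount point for a cluster name (hypothetical helper).
wrk_root_for_cluster() {
    case "$1" in
        kale)                  echo /wrk-kappa ;;
        vorna|ukko|carrington) echo /wrk-vakka ;;
        *)                     return 1 ;;   # unknown cluster name
    esac
}

# Return 0 if the path is under the mount visible to the cluster,
# 1 if it is not, and 2 if the cluster name is unknown.
dataset_visible_on() {
    local cluster="$1" path_to_check="$2" root
    root=$(wrk_root_for_cluster "$cluster") || return 2
    case "$path_to_check" in
        "$root"/*) return 0 ;;
        *)         return 1 ;;
    esac
}
```

For example, `dataset_visible_on kale /wrk-vakka/users/$USER/data || echo "copy the data to /wrk-kappa first"` prints the reminder, because data on Vakka is not visible on Kale.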

Operating systems: all systems run RHEL 8.x. In most cases, recompiling or relinking your code is sufficient.

1.1 Support Functions - If you have issues

Not everything always goes as planned, which is why we have a good support structure in place. In case of trouble, you can contact us in several ways. The right one depends on whether you need face-to-face, hands-on help, or whether your case contains information you do not wish to disclose publicly. Brief instructions on how to get the most out of our support are included here. We also provide targeted training sessions for large enough groups.

1.1.1 HPC Garage - hands on, face-to-face (or zoom)

HPC Garage is a daily event (weekends and holidays excepted), from 09:45 to 10:15. Participation details are available to all university users. See you there.

1.1.2 Bug and Issue Reporting

The University GitLab is used for issue and bug tracking. Unlike the closed environments used in the past, GitLab allows users to see, contribute to and search all open and closed bugs and issues in the environment. We believe this is the correct way, and we know that this view is shared by many of our users.

For convenience, GitLab has issue/bug template(s) that can be used when creating a new issue or bug. We strongly encourage the use of GitLab.

GitLab issues are open for all to view and browse unless you explicitly set the issue to be private when creating it.

HPC issues opened through GitLab come to our attention directly, without delay.

1.1.3 Helpdesk

In the extremely unlikely case that neither HPC Garage nor GitLab works for you, you may contact the helpdesk, which will then forward the ticket to us. The helpdesk ticket management system is very closed, so we kindly require you to fill in all necessary details (listed below in section 1.1.4) when the ticket is filed. Failing to do so causes delays in processing and may result in the ticket being bounced. Helpdesk guidelines are available in Flamma.

1.1.4 Issue Reporting Guidelines

The title is the most important part of a ticket. A good title is clear and short, and it summarises the issue, preferably including the affected component. Tag it, for example, with [HPC] to instruct the helpdesk to redirect it to us. You can use free-form severity indications if you like.

Example: [HPC] Vorna compute node vorna-340 has no modules available. Jobs landing on the node fail

The description elaborates on the issue. Remember that information density is key.

- A detailed description of how the issue occurs and how it affects your work.

- Is the issue continuously repeating, or intermittent?

- Your runtime environment (for example loaded modules, which machine or machines, versions).

- The actual result you get (copy/paste the error message).

- The result you expected.

- A test case or instructions to reproduce the issue, if at all possible.

- Attachments (code, logs etc. that may indicate the issue).

- Your contact details.

Example: Vorna compute node vorna-340 appears not to have modules available for $USER. My analysis crashes every time it runs on this node. I have loaded the GCC module, but in an interactive session on the node, module avail shows no modules. I expected module avail to list GCC. You can verify this by starting an interactive session on the node and attempting to load the GCC module. There are no other error messages.

Finally, two dos and one don't:

Do read your report when you are done. Make sure it is clear, concise and easy to follow.

Do be as specific as possible, and leave no room for ambiguity.

Do not include more than one issue in your report. Tracking the progress and dependencies of individual issues becomes a problem when a report contains several. If a helpdesk ticket includes more than one issue, it will be bounced back due to the restrictions of the helpdesk ticket management system.

1.2 Consumable Resources

For batch and interactive job scheduling, time, CPUs, GPUs, memory etc. are referred to as consumable resources. When submitting a batch or interactive job, you also set limits on the consumable resources the job may use, for example how long the job runs and how many CPUs it uses. Based on the request, the scheduler finds the best possible slot to satisfy it and allocates resources for the job to run on. The fewer resources you request, the easier it is to find a suitable slot. If your process then exceeds any of the requested limits, the scheduler terminates the offending job without harming others.

Turso is a combination of several smaller clusters and therefore contains a fair amount of differing hardware. To manage it all, and to make it as user-friendly as possible, we have set meaningful system defaults. However, if you have very specific requirements, we have a number of options available.

Some hybrid requests are possible, but usually not recommended.
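Time is one of the consumable resources you request. Slurm's -t/--time option accepts several formats, among them days-hours:minutes:seconds, so a run-time estimate can be turned into a limit with a small helper. The sketch below is hypothetical, and the function name is illustrative only. Requesting only the time you actually need makes it easier for the scheduler to find a slot.

```shell
# Sketch: convert a duration in seconds into Slurm's
# days-hours:minutes:seconds time format for the -t/--time option.
to_slurm_time() {
    local total="$1"
    local days=$(( total / 86400 ))
    local hours=$(( total % 86400 / 3600 ))
    local minutes=$(( total % 3600 / 60 ))
    local seconds=$(( total % 60 ))
    printf '%d-%02d:%02d:%02d\n' "$days" "$hours" "$minutes" "$seconds"
}
```

For example, `sbatch -t "$(to_slurm_time $((36 * 3600)))" test.job` requests a limit of 1-12:00:00.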

1.3 Fairshare Policy

We employ a Fairshare policy on Turso. In practice, this means that every user has a share of the system resources. Investor groups or other privileged groups may have a larger share count than others. This does affect the job priority calculation, but it will never allow a single user (or group) to trump others. A solid business case is needed for dedicated or privileged resource allocation, because such allocations are detrimental to general use.

Fairshare also implies that if the system is empty, a single user can have all the resources for a while, until other users submit jobs. Fairshare 'credits' are charged for the resources reserved, not for actual use.

1.4 Runtime directories

Only specific directories are mounted on the compute nodes. The home directory can now be used to store data on a regular basis, and there are no longer strict quota limits: $HOME and the former $PROJ have been combined. The home directory is located at:

$HOME

1.4.1 Project directory deprecation

The user application space at /proj/$USER has been deprecated. All former /proj contents have been migrated to $HOME/proj. For old users there are symlinks from /proj/$USER to $HOME/proj. We do not create symlinks or $HOME/proj folders for new users.

$HOME/proj

The working directory for scratch data on the compute nodes is /wrk. This directory points to either /wrk-vakka or /wrk-kappa, depending on the cluster you work on. To keep your batch scripts compatible across clusters without changes, you can use --chdir=/wrk/users/$USER in the batch script to change to the local working directory.

Working directory environment variables have been removed, because they cannot be set in a reasonable way that resolves the different directory structures of the compute nodes.

2.0 Access

Turso has a number of identical, load-balanced frontend nodes that serve as login hosts. If you open multiple ssh sessions, the load balancer attempts to place each new session on the host you previously logged in to, but this is not guaranteed. Behind the frontend nodes are the computational resources, which are accessible through Slurm. Frontend nodes are not intended for computation.

2.1 Applying for Account

You do not have to request access separately to each federation. Access to Turso grants you access to the resources of all of the clusters by default. You may have a different share count on different clusters.

Please note that it might take up to 2 hours after you have been added to the relevant group before your home directory is created. If you do not have access but would like to have it, please ask the manager/owner of your IDM group to send a note to helpdesk(at)helsinki.fi, specifying which group needs access to the clusters. Please note that keeping the IDM groups up to date is the responsibility of the group owners.

2.3 Accounts for Courses

If you are organising a course, your students can get access to cluster resources (CPU, GPU, storage, group directories etc.) in a simple way. The course organiser creates an IDM group for the course and then adds the students to the group. Once the group has been set up, all members (existing and new) will have access to the cluster within minutes.

Once you have a suitable IDM group for the course, contact us via the helpdesk. Include the IDM group name, and tell us whether you also need group directories. We'll set up the rest.

The following information is required:

- IDM group name

- group directory name (defaults to the IDM group name)

- username of the owner of the group directory (mandatory)

2.4 Users without Research Groups (students and other associates)

If a student needs access to the clusters, the procedure is the same as above. Students need to be members of an IAM/IDM group, which we will then add to the cluster users. This is required so that we can link students to an individual responsible for access management.

Students and other associates need a supervisor to create the IDM/IAM group and add them to it. The supervisor is then responsible for the group members' work on the systems.

Note: This is subject to change in the future, and we may grant access by default. However, we are obliged to manage the chain of responsibility in the event of a disaster or other situations that require decision making (for example, a student or other user leaving large quantities of data behind on departure).

2.5 Direct Access

Users can log in to Turso with ssh from within the university domain. For example:

ssh -YA username@turso.cs.helsinki.fi

Connections are only allowed from the helsinki.fi domain; VPN or eduroam is not sufficient. To access Turso from outside the domain (e.g. from home), add the following to your ~/.ssh/config:

Host turso.cs.helsinki.fi
    ProxyCommand ssh username@melkinpaasi.cs.helsinki.fi -W %h:%p

Note: with OpenSSH 7.3 or newer, you can replace the ProxyCommand line with a more human-readable one: ProxyJump username@melkinpaasi.cs.helsinki.fi
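If you prefer to script this setup, the sketch below appends a ProxyJump entry (OpenSSH 7.3 or newer) to an SSH config file. The function name is hypothetical, and `username` stands for your university username. The optional second argument lets you try it on a scratch file before touching ~/.ssh/config. For a one-off connection, the command-line equivalent is `ssh -J username@melkinpaasi.cs.helsinki.fi username@turso.cs.helsinki.fi`.

```shell
# Sketch: append a Turso entry that jumps via melkinpaasi to an SSH config file.
# Arguments: <university username> [config file, default ~/.ssh/config]
add_turso_jump_host() {
    local user="$1" config="${2:-$HOME/.ssh/config}"
    mkdir -p "$(dirname "$config")"
    # Leave the file untouched if an entry for Turso already exists.
    if [ -f "$config" ] && grep -q '^Host turso\.cs\.helsinki\.fi$' "$config"; then
        return 0
    fi
    cat >> "$config" <<EOF

Host turso.cs.helsinki.fi
    User $user
    ProxyJump $user@melkinpaasi.cs.helsinki.fi
EOF
    # ssh refuses config files that others can write to.
    chmod 600 "$config"
}
```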

Files can be moved from your home computer (Linux) to Turso from outside the university network like this:

~$ rsync -av --progress -e "ssh -A username@pangolin.it.helsinki.fi ssh" /my/directory/location username@turso.cs.helsinki.fi:/wrk/users/username/destination

On Windows, use WinSCP. On the launch screen, click "Advanced" and set up an SSH tunnel via e.g. pangolin.it.helsinki.fi, then log in to turso.cs.helsinki.fi on the WinSCP launch screen. Once the connection is established, you can drag and drop your files between your computer and Turso.

2.6 VDI Turso

VDI (Virtual Desktop Infrastructure) offers remote desktops running in virtual machines. Alongside the Windows and Cubbli Linux machines, there are now vdi-turso hosts. These hosts are similar to Turso login nodes, except that they also include traditional desktop software (with GUI) and a VDI agent. This gives you a login node with a desktop experience. You can use tools such as Phy GUI quite fluently from VDI machines. The same applies to many other visualization toolkits for which you would otherwise use an X-based application.

2.7 Jupyter Hub Access

JupyterHub runs on hub.helsinki.fi, which has a web-based user interface. To access the Hub and create a new session for yourself, please follow the instructions in the JupyterHub User Guide.

3.0 Development Environment

You can do development work on the interactive resources. Using utilities such as GNU screen or tmux, you can keep your sessions alive even if you temporarily log out. Some nodes have a different CPU architecture, so it is usually better to use an interactive session on the specific subcluster you intend to run the code on.

You can open an interactive session for development and debugging on any of the resources; no resource is restricted to batch mode only.

3.1 Software Modules

The development environment and most of the scientific software are managed with environment modules (Lmod). Modules allow multiple versions of compilers and software packages to coexist without interfering with each other. Modules also handle package relations and dependencies. Type the following to get a list of all installed modules:

[user@turso ~]$ module avail

To load the default version of Python:

[user@turso ~]$ module load Python

You can then check what was loaded with the command:

module list

Loading Python loads not only the default Python version but also a number of other modules that Python depends on. See the Module Environment user guide for more details. The module man page is also a good quick reference:

[user@turso ~]$ man module

3.1.1 RHEL8 Module repository

RHEL8 modules are set as default for all users.

3.1.2 CentOS 7 Legacy Module repository

CentOS 7 legacy modules are available until further notice, but they are not enabled by default. You have to explicitly enable (use) or disable (unuse) the legacy module repository:

module [unuse|use] /appl/modulesfiles/all

If you opt for the legacy modules, you may not want the default modules enabled at the same time, due to possible conflicts. In that case, unuse the system defaults and any other unwanted module repositories you may have enabled.

3.2 Open Source Tools

Turso has a standard suite of Open Source development tools and libraries. Various versions of development tools are available as modules.

3.3 Licensed Tools

Some licensed tools, compilers and libraries are also available.

3.3.1 Intel Parallel Studio XE Cluster Edition

At the moment, two concurrent floating licenses of Intel Parallel Studio XE Cluster Edition are available to the University. The licenses are served from the license server concession-2-18.it.helsinki.fi (TCP 27009). They are also available through the module environment on the computational clusters.

3.3.2 Parallel Studio Tools for Students, Educators and Open Source Developers

Students, open source developers and classroom educators (in class) are eligible for free Intel development tools. You can get your free license and associated tools directly from Intel.

3.4 Debugging & Versioning

Valgrind, GDB and git are installed by default on all compute and login nodes.

3.5 Compiling

Users can compile code on the login node(s). Each login node has the libraries necessary for compiling for all of the available architectures. If headers or libraries are missing, please let us know as soon as possible. Compute nodes are stateless, so most development libraries and tools are omitted from them.

Note that Turso has both Intel and AMD architectures, and code needs to be compiled specifically for the intended architecture for best performance.

3.5.1 Static vs. Dynamic linking

Dynamic linking has a number of benefits. However, for scientific software and HPC workloads it is often a far better idea to link programs statically. Somewhat larger binaries and greater memory consumption are not significant factors, and they are outweighed by the benefits of portability and ease of distribution. Compared with the size of typical datasets, neither takes up a significant share of the whole.

Static linking does have several notable benefits in scientific computing:

- Portability - This is the single most important reason in a heterogeneous cluster federation. You can run your code without recompiling it for the precise node configuration you are going to execute it on. Of course, you still need to make sure that the architecture is compatible and that the kernel versions are not too far apart.

- Ease of distribution - You can easily share your compiled binary with your colleagues.

- No more cases of libraries not being found.

That said, we do not require every program to be statically linked. It is, however, a viable alternative, especially when you need to make sure that your programs are portable across the various hardware combinations of Turso.
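After building with GCC's -static flag (for example `gcc -O2 -static -o hello hello.c`, where hello.c is a hypothetical source file), you can check the result before copying the binary to other nodes. This is a minimal sketch that assumes a glibc-based system and a static C library (libc.a) installed for the compiler you use. The function name is illustrative only.

```shell
# Sketch: return 0 if ldd reports that the given binary is not dynamically linked.
# glibc's ldd prints "not a dynamic executable" for static binaries; some versions
# print "statically linked" instead. ldd also prints "not a dynamic executable"
# for non-ELF files such as scripts, so only pass compiled binaries.
is_static_binary() {
    local out
    out=$(ldd "$1" 2>&1) || true   # ldd exits non-zero for static binaries
    case "$out" in
        *"not a dynamic executable"*|*"statically linked"*) return 0 ;;
        *) return 1 ;;
    esac
}
```

Usage: `is_static_binary ./hello && echo "hello is statically linked"`.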

4.0 Runtime Environment

4.1 Standard

All compute nodes are stateless. The standard runtime environment is x86 RHEL 8.x Linux. The operating system is subject to change. You can see the exact kernel version with:

uname -a

4.2 Interactive Sessions

A Slurm upgrade has changed the way interactive sessions work: the "interactive" command is no longer in use. Instead, you first need to create an interactive reservation of resources. You can think of it as a virtual playground reserved for your processes alone. The command should contain the resources you would like to have (any resource can be chosen for interactive use), e.g. number of CPUs (-c), number of tasks (-n), memory (--mem=), time (-t), partition (-p) and cluster (-M). For example (the values are just examples; do not copy them as-is unless you really need what is listed):

srun --interactive -n 4 --mem=4G -t 00:10:00 -p short -M [ukko|vorna|kale|carrington] --pty bash

This starts a bash shell which, at this point, has a single CPU core at its disposal. To claim the reserved resources from inside the bash shell, launch your program with srun:

srun -n4 --mem=1G -M [ukko|vorna|kale|carrington] <your executable here>

Without the --interactive option, the subsequent srun call that places your processes will not work. It fails without any error message and simply hangs forever, or until the time limit, whichever comes first.

4.2.1 X11 for Visualisation

If you wish to use X applications on the cluster, the most convenient way is to use the VDI machine called vdi-turso. This is a graphical virtual login host with native components that allow X applications to work from the compute nodes of the Kappa federation.

You can easily use applications such as Matlab in graphical mode on a compute node by launching an interactive session with the --x11 option.

4.2.1.1 X11 From login nodes

X11 forwarding is supported in interactive sessions with the following option:

--x11

4.2.2 Interactive GPU development

Some of our resources are oversubscribed. On the Slurm side, the oversubscription applies to the CPUs. Slurm cannot allocate GPU resources in a reasonable way in shared mode, so we have used a few tweaks to make this work. Oversubscription increases the number of CPUs available to users, since their limited number quickly becomes the bottleneck in GPU development and other shared GPU use.

In practice, CPUs are oversubscribed 4:1, and one GPU on a node is available for shared use. The three other GPUs on the same node can be requested by regular means, through the same queue, if and when an exclusive GPU is needed. The following examples show how to use them.

To use the shared GPU for development or other interactive work, you can use the following example:

srun --interactive -c4 --mem=4G -t04:00:00 -pgpu-oversub -Mukko --pty bash

Note that this does not set the CUDA_VISIBLE_DEVICES environment variable automatically. If needed, you can set it by adding the following option to the command above:

--export="ALL,CUDA_VISIBLE_DEVICES=0"

The following example requests an entire GPU for exclusive use from the oversubscribed partition. Note that you have to specify the number of GPUs with -G. If it is not specified, Slurm only allocates you the unlisted (shared) GPU, and the CUDA_VISIBLE_DEVICES variable will not be set:

srun --interactive --mem-per-gpu=4G --cpus-per-gpu=4 -t04:00:00 -pgpu-oversub -G 1 -Mukko --pty bash
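Inside either kind of session, it can be useful to check which GPUs your processes will see before starting a long run. This is a minimal sketch with a hypothetical function name. It only inspects the CUDA_VISIBLE_DEVICES variable discussed above and says nothing about the hardware itself; nvidia-smi shows that.

```shell
# Sketch: report the GPUs exposed through CUDA_VISIBLE_DEVICES.
# Prints a warning and returns 1 when the variable is unset or empty, as on the
# shared GPU of gpu-oversub without --export="ALL,CUDA_VISIBLE_DEVICES=0".
check_visible_gpus() {
    if [ -z "${CUDA_VISIBLE_DEVICES:-}" ]; then
        echo "CUDA_VISIBLE_DEVICES is not set" >&2
        return 1
    fi
    echo "Visible GPUs: ${CUDA_VISIBLE_DEVICES}"
}
```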

4.3 Batch jobs

Batch jobs are submitted with a script that acts as a wrapper around the job. The batch script provides the scheduler with the instructions necessary to execute the job on the right set of resources (as in an interactive session, inside your virtual playground). As in an interactive session, environment variables are inherited from the original session. Below we create a simple example batch job that does not do a whole lot, but gives an idea of how to construct batch scripts.

The following example job requests 1 core and 100 MB of memory for 5 minutes, with placement in the test queue. At the end of the script, ordinary Unix commands start the program(s), scripts etc. You can execute pretty much any Linux script or command that you could execute on a login node. The only practical exception is that you cannot easily start daemons from a batch job.

A batch script has a specific syntax. It starts with a shebang (#!/bin/bash), and the batch parameters must be set in the script before the actual payload. In the example below, you can use your favourite editor instead of vi.

vi test.job

Type the following into the file test.job. Note that you can use various variables to control your directory structures. For example, %j in the output file name is replaced with the job ID. In the payload, you can use the $SLURM_JOB_ID environment variable to create a directory for temporary files associated with this job.

See the documentation for the complete list of usable environment variables.

Structuring I/O operations significantly reduces the risk of accidental concurrent file operations and also improves performance. A simple analogy: one pen, one paper and one author will probably get things done sensibly. One pen, one paper and 100 authors all attempting to write with the same pen will probably turn out either a mess or rather slow. 100 pens, 100 papers and 100 authors will be pretty fast, but only if everyone is writing their own story.

If you need 100 authors to write a single larger story, then you would have to think truly in parallel...

Always bear this in mind for file operations on any parallel filesystem, or on any filesystem accessible from multiple hosts simultaneously.

#!/bin/bash
#SBATCH --job-name=test
#SBATCH --chdir=[/wrk-vakka/users/$USER/<directory>|/wrk-kappa/users/$USER/<directory>]
#SBATCH -M [vorna|kale]
#SBATCH -o result-%j.txt
#SBATCH -p test
#SBATCH -c 1
#SBATCH -t 05:00
#SBATCH --mem-per-cpu=100

# The actual payload starts here
mkdir temporary-files-$SLURM_JOB_ID
touch temporary-files-$SLURM_JOB_ID/tempfile
hostname
sleep 60

The following command submits test.job to the system, and the scheduler takes care of the job placement. sbatch also accepts options on the command line, and they take precedence over anything in the batch file:

sbatch test.job

When the job runs, its output is written to the file result-<jobid>.txt. The example above prints the hostname of the node the job was executed on and then waits for 60 seconds.

Note that the -p option accepts multiple comma-separated partitions. For example, "-p short,long" allows the job to run in either the short or the long queue, assuming the job does not exceed the queue's maximum time limit.
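The test.job payload names its temporary directory after $SLURM_JOB_ID, which is the "one pen, one paper per author" rule applied to I/O. The sketch below wraps that pattern in a reusable function. It is an assumption-laden illustration, and the function name is hypothetical. SLURM_JOB_ID is only set inside a job, so the sketch falls back to the shell's PID, which lets you try it on a login node.

```shell
# Sketch: create a per-job scratch directory so that concurrent jobs never
# write to the same files. Prints the directory path on success.
# Argument: base directory (default: current directory)
make_job_tmpdir() {
    local base="${1:-.}"
    local dir="$base/temporary-files-${SLURM_JOB_ID:-$$}"
    mkdir -p "$dir" && echo "$dir"
}

# Possible use in a batch payload (after the #SBATCH lines):
#   tmpdir=$(make_job_tmpdir "/wrk/users/$USER")
#   trap 'rm -rf "$tmpdir"' EXIT   # only if the files are not needed afterwards
```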

4.4 How to request GPU's

Below is an example script for GPU usage. It requests a single GPU (note that for this you need to use -G 1), two cores per GPU and 100 MB of memory per GPU, for the default time. (Cluster ukko2 nodes have 8 P100 GPUs, and ukko3 nodes have DGX1 with 8 V100 GPUs, and 8 A100 GPUs.)

A100 GPUs require CUDA 11.x or newer to operate properly.

#!/bin/bash
#SBATCH --job-name=test
#SBATCH --chdir=[/wrk-vakka/users/$USER/<directory>|/wrk-kappa/users/$USER/<directory>]
#SBATCH -M [ukko|kale]
#SBATCH -o result.txt
#SBATCH -p [gpu|gpu-short]
#SBATCH --cpus-per-gpu=2
#SBATCH --constraint=[p100|v100|a100]
#SBATCH -G 1
#SBATCH --mem-per-gpu=100M

mkdir temporary-files-$SLURM_JOB_ID
touch temporary-files-$SLURM_JOB_ID/tempfile
srun hostname
srun nvidia-smi

4.5 Setting up e-mail notifications

You can set up e-mail notifications for batch jobs. If set, changes in the job status are sent to the specified user; the default is the user who submits the job. The most commonly used mail options are NONE, BEGIN, END, FAIL and ALL. To set the option, add the following line to the batch script. Multiple options can be given as a comma-separated list:

#SBATCH --mail-type=<option>,<option>

You may also specify a mail address other than the default:

#SBATCH --mail-user=<mail@address>

4.6 Environment Variables and Exit Codes

If you want only some environment variables, or none, to be propagated from your original session, use the --export option (the default is ALL):

--export=<environment variables | ALL | NONE>

A job inherits its working directory from the directory it was launched from, unless specified otherwise with --chdir. This is important to note when you work with both Vakka and Kappa.

When a batch job is submitted and launched, Slurm sets a number of environment variables that can be used for job control. Standard Linux exit codes are used at job exit. Please see this page for a full compendium of the variables and exit codes.
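For a batch job, Slurm records the batch script's own exit status as the job's exit code. A shell script's exit status is that of its last command, so a failure early in the payload can be masked by a later command that succeeds, such as the final sleep 60 in test.job. The sketch below demonstrates this with plain bash, and the function names are illustrative only. Adding set -e right after the #SBATCH lines makes the payload stop at the first failing command, so the job is reported with a non-zero exit code.

```shell
# Sketch: a script's exit status is the status of its last command.
# Without "set -e", the failing "false" is masked by the echo that follows
# (exit status 0). With "set -e", the script stops at "false" (exit status 1).
payload_without_errexit() {
    bash -c 'false; echo "still running"'
}

payload_with_errexit() {
    bash -c 'set -e; false; echo "not reached"'
}
```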

4.7 Job Control and Monitoring

You can overlap another interactive job with an existing batch job or interactive session if you need to check from the node what the job is doing. This is handy for debugging. The overlapping job is subject to the limits of the parent job.

srun --jobid <jobid> -M <cluster> --overlap --pty bash

Once a job is submitted, you can use the following commands to view the status of the queues and change the job status if needed.

For general system status, you can use:

slurm <options>

Running the command without options shows the full range of options. The slurm command has been ported from Aalto to work with federations, which means you may need the -M <cluster> option.

Show queue information:

sinfo -l

If you want to cancel your job:

scancel -M [vorna|ukko|carrington|kale] <jobID>

A traditional view of your own jobs:

squeue -M [vorna|ukko|carrington|kale] -l -u $USER

For information about a job that is running:

scontrol -M [vorna|ukko|carrington|kale] show jobid -dd <jobID>

For information about a completed job's efficiency, use seff. The output of seff is automatically included at the end of job mail notifications, if notifications are enabled in the batch script:

seff -M [vorna|ukko|carrington|kale] <jobID>

Accounting information and job history can be obtained with the sacct command, which supports many options (see man sacct for exact details):

sacct --allocations -u $USER -M [vorna|ukko|carrington|kale]
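sacct can also print machine-readable output. Its -n (no header), -P (pipe-separated) and --format options make it easy to filter the job history. The sketch below lists jobs that did not complete successfully. The function name and the chosen fields are illustrative assumptions. The function reads from standard input, so you can test it on sample text without Slurm.

```shell
# Sketch: print jobs that ended in a state other than COMPLETED.
# Expects lines of the form JobID|JobName|State|ExitCode, e.g. from:
#   sacct -X -n -P -u "$USER" -M vorna --format=JobID,JobName,State,ExitCode
# Running and pending jobs are skipped; lines with fewer than 4 fields are ignored.
failed_jobs() {
    awk -F'|' 'NF >= 4 && $3 != "COMPLETED" && $3 != "RUNNING" && $3 != "PENDING" {
        print $1, $3, $4
    }'
}
```

Piping the sacct command from the comment into failed_jobs prints one line per failed, cancelled or timed-out job, with its state and exit code.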

Summary of less common job control commands:

| Command | Description |
|---|---|
| sacct | Displays accounting data for all jobs. |
| scontrol | View Slurm configuration and state. |
| sjstat | Display statistics of jobs under the control of Slurm (combines data from sinfo, squeue and scontrol). |
| sprio | Display the priorities of pending jobs. Jobs with higher priorities are launched first. |
| smap | Graphically view information about Slurm jobs and partitions, and set configuration parameters. |

There is a comprehensive Slurm Quick Reference & Cheat Sheet that can be printed out.

4.8 Federations, Queues & Partitions

Turso is a federation of multiple clusters. You can check the currently active members with sacctmgr command:

sacctmgr show federation

Several queues (partitions) are available on each of the federation members under Turso. You can see an overall view of the clusters with:

sinfo -M all

You have to choose a cluster and a partition when running jobs on the federation. The default cluster is Vorna and the default partition is short. Unless stated otherwise with the -M flag, jobs only queue and run on Vorna.

-M <cluster name(s), comma separated> -p <partition name>

You can choose multiple clusters as targets, for example:

-M ukko,vorna -p short

If you do, the system attempts to place the job on both clusters but runs it only on the first available one. As a result, you will see duplicate job entries in the squeue output: one that shows the job running and one that shows it as revoked. The latter is only a placeholder.

Informational commands such as scontrol, squeue, sinfo and others require -M [clustername|all] to be specified.

4.8.1 Cluster Profiles

- Kale: long serial jobs and moderate parallel jobs for up to 14 days, large datasets for all users, and a VDI visualization option

- Ukko: short serial jobs and moderate parallel jobs for up to 2 days

- Vorna: large parallel jobs

See exact queue details below.

4.8.2 Partitions - Vorna

Vorna is profiled for large parallel jobs. We advice to use Kale for long running serial jobs.

| Vorna Partitions/Queues | Max Wall Time | Qty of Nodes | Node Memory | Note |
|---|---|---|---|---|
| short | 1 day | 148 | 57GB | Optional: --constraint=[intel] |
| medium | 3 days | 148 | 57GB | Optional: --constraint=[intel] |
| test | 20 min | 180 | 57GB | Optional: --constraint=[intel] |

4.8.3 Partitions - Carrington

| Carrington Partitions/Queues | Max Wall Time | Qty of Nodes | Node Memory | Note |
|---|---|---|---|---|
| short | 1 day | 10 AMD | 496GB | Restricted |
| long | 7 days | 10 AMD | 496GB | Restricted |

4.8.4 Partitions - Ukko

Ukko is profiled for fast-turnaround parallel and serial jobs.

| Ukko Partitions/Queues | Max Wall Time | Qty of Nodes | Node Memory | Note |
|---|---|---|---|---|
| gpu | 1 day | 1x (4x A100) | 496GB | Optional: --constraint=[v100\|a100\|p100] |
| gpu-long | 3 days | 1 (4x P100) | p100 VRAM: 16GB | Optional: --constraint=[p100] |
| gpu-oversub | 4 hours | 2 (4x A100) | p100 VRAM: 16GB | Optional: --constraint=[a100\|p100] |
| short | 8 hours | 16 AMD | 496GB | Optional: --constraint=[amd] |
| medium | 2 days | 16 AMD | 496GB | Optional: --constraint=[amd] |
| bigmem | 3 days | 3 Intel | 1.5TB - 3TB | Optional: --constraint=[intel] |
| short | 1 day | 32 Intel | 250GB | Optional: --constraint=[intel] |
| medium | 2 days | 32 Intel | 250GB | Optional: --constraint=[intel] |

4.8.5 Partitions - Kale

Kale is profiled for long parallel and serial jobs.

| Kale Partitions/Queues | Max Wall Time | Qty of Nodes | Node Memory | Note |
|---|---|---|---|---|
| short | 1 day | 42 | 381GB | Optional: --constraint=[avx,intel] |
| medium | 7 days | 26 | 381GB | Optional: --constraint=[avx,intel] |
| long | 14 days | 29 | 381GB | Optional: --constraint=[avx,intel] |
| bigmem | 14 days | 1 | 1.5TB | Optional: --constraint=[intel] |
| test | 10 min | 1 | 381GB | Optional: --constraint=[avx,intel] |
| gpu | 7 days | 3 | VRAM 16/32GB | Optional: --constraint=[v100\|v100bm] |
| gpu-short | 1 day | 1 | VRAM 16GB | Optional: --constraint=[v100] |
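As an illustration of how the table columns map to job options, here is a sketch of a batch script for Kale's gpu partition that is restricted to V100 nodes. The --gres=gpu:1 request assumes the GPUs are configured as a GRES named gpu. The time, memory and CPU values are placeholders. Check both against current site instructions before use.

```shell
#!/bin/bash
# Cluster, partition and constraint come from the Kale table above.
# --gres=gpu:1 assumes a GRES named "gpu"; time/memory/CPU values are placeholders.
#SBATCH --job-name=kale-gpu-demo
#SBATCH --clusters=kale
#SBATCH --partition=gpu
#SBATCH --constraint=v100
#SBATCH --gres=gpu:1
#SBATCH --time=04:00:00
#SBATCH --mem=16G
#SBATCH --cpus-per-task=4

set -euo pipefail

# In a GPU job, Slurm normally limits the visible GPUs via CUDA_VISIBLE_DEVICES;
# "none" means the script is running outside such a job.
gpus="${CUDA_VISIBLE_DEVICES:-none}"
echo "Allocated GPU(s): ${gpus}"
```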

5.0 Storage Solutions

5.0.1 Directory permissions and permission management

The default permissions for $HOME, /wrk-kappa/users/$USER and /wrk-vakka/users/$USER are 700 (drwx------). This means that only $USER has read, write and execute permissions. $USER can change these defaults. However, before opening the permissions to the whole world, consider the widest audience that actually needs access to your directories.

Usually it is enough to grant permissions to your research group (that is, your IDM group). You can change the group owner with the chgrp command and then set the permissions accordingly (chmod for existing files and directories, umask for newly created ones).

We strongly advise against opening directory permissions for everyone to read, especially when the group owner is hyad-all, which includes all users.
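A minimal sketch of sharing a directory with your research group follows. GROUP and TARGET are placeholders. Set GROUP to your IDM group and TARGET to the directory you want to share, for example a subdirectory of /wrk-kappa/users/$USER. When they are not set, the script falls back to your primary group and a temporary directory, so you can try it safely. Mode 750 gives the group read and traverse access but not write access. Use 770 if the group also needs to write.

```shell
#!/bin/bash
set -euo pipefail

# Placeholders: override with e.g. GROUP=<your IDM group> TARGET=/wrk-kappa/users/$USER/shared
GROUP="${GROUP:-$(id -gn)}"
TARGET="${TARGET:-$(mktemp -d)}"

# Make the research group the group owner of the directory.
chgrp "$GROUP" "$TARGET"

# Owner: read/write/execute; group: read/execute; others: no access.
chmod 750 "$TARGET"

# Show the resulting mode, group and path.
stat -c '%a %G %n' "$TARGET"
```

Group members can only reach TARGET if every parent directory, such as /wrk-kappa/users/$USER, also grants them execute permission (for example with chmod g+x).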

5.1 Lustre

Our working directories are based on Lustre, a highly scalable and very fast parallel filesystem. The Lustre user guide and in-depth documentation are readily available. Lustre powers /wrk-kappa/users/$USER and /wrk-vakka/users/$USER and is available to all users by default. Please familiarise yourself with the cleaning policy.

5.2 Helsinki University Datacloud, CSC's Allas and IDA object storages

Ceph is an object storage system for research data. It allows sharing over the S3 or Swift protocols. You can access these resources with rclone.

5.2.1 Datacloud

Use these instructions to set up rclone for Datacloud access: Uploading large data sets to Datacloud via WebDAV

Before running the Datacloud content listing test (rclone ls datacloud:), run these commands:

unset https_proxy

unset HTTPS_PROXY

If you are running a batch job that needs a connection to Datacloud, include the two unset commands in your batch job script. Check that your Datacloud contents are now listed properly with rclone (press Ctrl-C to stop if the list is long):

rclone ls datacloud:

You can now use other rclone commands (rclone copy etc.).
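Instead of unsetting the proxy variables for the whole batch script, you can remove them only for the rclone call by running it in a subshell. Other commands in the script then keep the proxy settings. A minimal sketch, assuming the rclone remote is named datacloud as above. The function name to_datacloud is hypothetical.

```shell
# Hypothetical helper for a batch script: copy a local path to the "datacloud" remote.
# The proxy variables are unset only inside the subshell ( ... ), so the rest
# of the script still sees them.
to_datacloud() {
    local src="$1" dest="$2"
    (
        unset https_proxy HTTPS_PROXY
        rclone copy "$src" "datacloud:$dest"
    )
}
```

Call it in your batch script after the computation, e.g. `to_datacloud results/ my-project/results` (both paths are placeholders).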

5.2.2 CSC's Allas

Allas documentation is here: https://docs.csc.fi/data/Allas/

wget https://raw.githubusercontent.com/CSCfi/allas-cli-utils/master/allas_conf

source allas_conf --user <YOUR_CSC_ACCOUNT_USERNAME> --project <PROJECT_ID>

You can get your project ID for example from https://my.csc.fi

When successful, the configuration script tells you that the connection to Allas will stay open for 8 hours. You should now be able to list your content in Allas with:

rclone lsd allas:

You can now use other rclone commands with Allas (rclone copy etc.).

For all other questions concerning Allas, please contact CSC's helpdesk at servicedesk@csc.fi

5.2.3 CSC's IDA

IDA is CSC's service for long-term data storage. You can use IDA anywhere on Turso or other University of Helsinki systems where you have write access. You need a CSC user account and an existing CSC project with IDA access enabled; these details are required for all IDA transfers. You can then install CSC's command-line tools from this repository: https://github.com/CSCfi/ida2-command-line-tools

You can also access IDA with rclone. Before you start, make sure you comment out or remove these commands from your pipeline/script:

unset https_proxy

unset HTTPS_PROXY

If you have already run these commands during your session, the easiest way to restore the variables is to log out of Turso and log back in.

Then start the interactive rclone configuration:

rclone config

Proceed as in 5.2.1 for Datacloud, with the following changes:

The server URL is: https://ida.fairdata.fi/remote.php/webdav/

CSC recommends using an app token (which must already be defined for your IDA project) instead of your CSC password when rclone config asks for the password of the new IDA service (cf. https://www.fairdata.fi/en/user-guide/#app-passwords).

If you want, you can define each IDA project as a separate rclone service, e.g. 'ida-2003683' and 'ida-2003505', each with its own project-specific app token.

IDA also has a graphical user interface at https://ida.fairdata.fi/login

For all inquiries concerning IDA, please contact CSC's helpdesk at servicedesk@csc.fi

5.3 NFS mounted filesystems

NFS-mounted disks are used primarily for various projects. NFS-mounted directories must not be used as runtime scratch because of the excess traffic this causes. The only exception is databases. NFS powers $HOME and the module repository on all cluster components. NFS-mounted directories are global across clusters.

$HOME is intentionally very small at the moment due to server end-of-life (EOL). Please do not store data there. Always relocate caches and other temporary data to /wrk-kappa/users/$USER or /wrk-vakka/users/$USER.
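Many programs choose their cache location from environment variables, so a few exports in ~/.bashrc can keep caches out of $HOME. A sketch follows. XDG_CACHE_HOME is the generic cache variable from the XDG Base Directory specification, and many tools honour it. PIP_CACHE_DIR is read by pip, and SINGULARITY_CACHEDIR is read by Singularity. WRK is a hypothetical variable name: set it to /wrk-kappa/users/$USER or /wrk-vakka/users/$USER, whichever you use. Other software may need its own settings.

```shell
# Hypothetical ~/.bashrc snippet: keep caches out of the small $HOME quota.
# WRK is an illustrative name; point it at your own working directory.
WRK="${WRK:-/wrk-kappa/users/${USER:-$(id -un)}}"

export XDG_CACHE_HOME="$WRK/.cache"                        # generic cache dir used by many tools
export PIP_CACHE_DIR="$XDG_CACHE_HOME/pip"                 # pip download/wheel cache
export SINGULARITY_CACHEDIR="$XDG_CACHE_HOME/singularity"  # Singularity image cache

# Create the cache directories only if the working directory exists on this host.
if [ -d "$WRK" ]; then
    mkdir -p "$PIP_CACHE_DIR" "$SINGULARITY_CACHEDIR"
fi
```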

5.4 Group Folders

We create group folders on request. Group folders are created in two places to provide flexibility. For a shared workspace, you will have a group folder in /wrk, which provides good I/O performance. The work group folder is also available on your desktop through Samba:

/wrk-[vakka|kappa]/group/<groupname>

For sharing software or common datasets, you will have an NFS-mounted group folder in $HOME/proj:

$HOME/group/<groupname>

If your research group needs a group folder, please create a request through the helpdesk with the following information:

- Name of the folder

- Owner of the folder (this individual is responsible for the use)

- IDM group

5.5 Directories available outside of the cluster

You can access your Vakka and Kappa working data from Windows machines and Macs through the following share:

\\turso-fs.cs.helsinki.fi\

Cubbli machines (workstations, jump hosts etc.) have the following NFS mount:

turso-fs.cs.helsinki.fi:/turso /home/ad/turso

Note that all non-IB connections require a Kerberos ticket.

5.6 Filesystem Policies

- As of June 2023, there is unfortunately no automated backup of the HPC storage systems. For your own safety, replicate irreplaceable data to other systems, such as HU Datacloud or CSC (see 5.2.x). We intend to introduce limited backup (/home, /group) later on, but this is still in the planning phase.

- /wrk-kappa/users/$USER and /wrk-vakka/users/$USER are always local to the federation (Kappa, Vakka).

- The working directories /wrk-kappa/users/$USER and /wrk-vakka/users/$USER provide very fast I/O performance and have a quota limit of 50TB.

- The application directory variable $USERAPPL points to the best location for programs, executables and source code (deprecated).

- The temporary directory variable $TMPDIR points to the best available location for temporary files on the current system.

- The home directory $HOME is only for keys, profile files etc. Do not store data there. Most software can be redirected to use a location other than ~/ for caches, temporary files etc.; use /wrk-kappa/users/$USER or /wrk-vakka/users/$USER for this purpose. Note that $HOME has a very strict quota limit.

These policies are subject to change.
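Below is a minimal sketch of a batch script body that keeps temporary files in $TMPDIR and cleans up after itself. The job.XXXXXX directory name, the /tmp fallback and the sorting step are illustrative placeholders only.

```shell
#!/bin/bash
set -euo pipefail

# Private scratch directory under $TMPDIR; /tmp is only a fallback for
# running the sketch outside the cluster.
scratch="$(mktemp -d "${TMPDIR:-/tmp}/job.XXXXXX")"

# Remove the scratch directory when the script exits, also on errors.
trap 'rm -rf "$scratch"' EXIT

# Placeholder work: write intermediate data to scratch, keep only the result.
printf '%s\n' 3 1 2 > "$scratch/unsorted.txt"
sort -n "$scratch/unsorted.txt" > "$scratch/sorted.txt"
result="$(paste -sd, "$scratch/sorted.txt")"

# Copy anything worth keeping to your work directory before the job ends,
# e.g. cp "$scratch/sorted.txt" /wrk-kappa/users/$USER/
echo "Result: $result"
```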

6.0 Common Special Cases

6.1 Python Virtual Environment

You can conveniently create your own Python virtual environment. The example below creates a virtual environment, activates it and then installs TensorFlow and Keras on top. Keep in mind that if you create the virtual environment with one version of Python, you need to have the same version loaded whenever you activate it later:

1. module purge (intent of module purge is to give a clean slate)

2. module load Python cuDNN

3. python -m venv myTensorflow

4. source myTensorflow/bin/activate

5. pip install -U pip

6. pip install tensorflow

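7. pip install tensorflow-gpu (only for old TensorFlow releases; current tensorflow packages include GPU support, and the separate tensorflow-gpu package is deprecated)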

You can then add additional libraries etc.:

8. pip install keras

9. pip install <any additional packages you wish to install...>

10. To deactivate the virtualenv, just type deactivate
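The virtual environment is tied to the Python module it was created with. A small helper that reloads the same modules before activating can therefore prevent version mismatches in later sessions. A sketch, assuming the environment was created in the current directory as in step 3. The function name use_tf_env and the VENV_DIR override are hypothetical. Loading exact module versions (as shown by module list when you created the environment) is safer than relying on default versions, which may change.

```shell
# Hypothetical helper: reload the modules used when the venv was created,
# then activate it. Replace the module names with the exact versions
# reported by "module list" at creation time.
use_tf_env() {
    local venv="${VENV_DIR:-$PWD/myTensorflow}"
    module purge
    module load Python cuDNN
    # shellcheck source=/dev/null
    source "$venv/bin/activate"
}
```

After running use_tf_env, `python -c "import tensorflow as tf; print(tf.config.list_physical_devices('GPU'))"` lists the GPUs that TensorFlow can see. On a node without GPUs, the list is empty.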

6.2 Jupyter Notebook and JupyterHub

JupyterHub is a service that lets you start interactive sessions on compute nodes. This allows you to use Jupyter Notebook without setting up notebook servers manually on the compute nodes. Access it with a web browser:

https://hub.cs.helsinki.fi/

6.3 Containers

Singularity is currently our preferred container environment, and it is available on the Vakka federation compute nodes. You can build Docker containers and run them under Singularity.
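Below is a sketch of converting an image from Docker Hub into a Singularity image file (SIF) and running a command inside it. singularity pull with a docker:// source and singularity exec are standard Singularity commands. The helper name sif_run and the example image are hypothetical.

```shell
# Hypothetical helper: build a SIF file from a Docker image once, then run
# a command inside it. Usage: sif_run <docker-image> <command> [args...]
sif_run() {
    local image="$1"; shift
    # Derive a file name, e.g. "python:3.11-slim" -> "python_3.11-slim.sif".
    local sif="${image//\//_}"
    sif="${sif//:/_}.sif"
    # Pull only if the image file does not exist yet.
    if [ ! -f "$sif" ]; then
        singularity pull "$sif" "docker://$image"
    fi
    singularity exec "$sif" "$@"
}
```

For example, `sif_run python:3.11-slim python3 --version` pulls the image on the first call and reuses python_3.11-slim.sif afterwards.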

6.4 Spark

We plan to provide a Spark environment. Status: DEFERRED.

7.0 Scientific Software

We have installed a number of scientific software packages on Turso. Whenever possible, they are installed as modules and are therefore visible in the list of available modules (module avail). You can then easily load the module combination you need.

Some packages require specific notes or debugging information. We maintain an FAQ on scientific software packages with expected or known issues.

If you encounter issues with any of the packages, would like to file a bug report or need specific software packages, please leave a feature request ticket in GitLab.

8.0 Parallel Computing

Most components of Turso use a low-latency InfiniBand interconnect and are therefore well suited to parallel applications. The practical maximum size for a single parallel job is about 1800 cores. If you need to run a job of that size, we advise contacting us first, especially if overall system usage is high.

Please see a separate page for parallel computing details.

9.0 HOW TO ACKNOWLEDGE HPC?

In science, funding is everything. To secure the continuity of our HPC resources, we must be able to show the significance of HPC capabilities in our funding applications. Please add the following wording to the Acknowledgements section of every paper:

"The authors wish to thank the Finnish Computing Competence Infrastructure (FCCI) for supporting this project with computational and data storage resources."

10.0 Additional Reading

Aalto University Triton User Guide

CSC's Taito cluster's documentation may be useful

Slurm Quick Reference & Cheat Sheet

Version 1.3
